Accelerate Your Machine Learning with Snap: A New Approach

Introduction to Snap

Machine learning lets us automate processes and uncover hidden patterns in data. Accuracy is the first thing we look at when training a model, but training on large datasets can also be time-consuming. So alongside the model's effectiveness, the speed at which we can train it matters a great deal.

At present, Scikit-Learn is one of the most widely used libraries for machine learning. Yet, as many have experienced, it can be sluggish on large datasets. This is where Snap ML (Snap for short), a library from IBM Research Zurich, comes in: it is built for high-speed training of popular machine learning models.

In this article, we'll explore how to use Snap to train models on large datasets. If you're already familiar with Scikit-Learn, you'll find Snap very easy to pick up. Let's dive in!

Data Source Overview

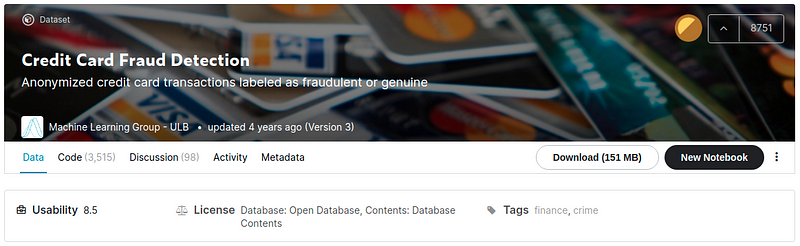

For our data source, we will use the Credit Card Fraud Detection dataset from Kaggle. It contains over 284,000 credit card transactions recorded in September 2013, of which 492 are labeled as fraudulent.

To protect privacy, the dataset exposes very little identifiable information: most of the features are anonymized, and the transaction amount is one of the few left in its original form. You can access further details about the dataset through the provided link.

Screenshot captured by the author.

To download the dataset, use the Kaggle API; you will need a Kaggle API key for access. The following snippet handles the download:

# Code for downloading dataset from Kaggle
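The original snippet is not reproduced here, so what follows is only a minimal sketch of one way to do it. It assumes the `kaggle` command-line tool is installed, that your API key is stored in `~/.kaggle/kaggle.json`, and that the dataset slug is `mlg-ulb/creditcardfraud`. The helper names `build_download_command` and `download_dataset` are hypothetical.

```python
import subprocess

# Assumption: slug of the Credit Card Fraud Detection dataset on Kaggle.
DATASET = "mlg-ulb/creditcardfraud"


def build_download_command(dataset=DATASET, dest="."):
    """Build the Kaggle CLI call that downloads a dataset archive into dest."""
    return ["kaggle", "datasets", "download", "-d", dataset, "-p", dest]


def download_dataset(dataset=DATASET, dest="."):
    """Run the download; fails if the CLI or the API key is missing."""
    subprocess.run(build_download_command(dataset, dest), check=True)


# Usage (needs network access and a valid API key):
# download_dataset(dest="data")
```

Keeping the command in its own function makes it easy to inspect what will run before anything touches the network.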

Preparing the Data

Once we have the dataset, our next step is to load it into a DataFrame. First, we need to unzip the file, which can be accomplished with the following code:

# Code for unzipping the file
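As a rough sketch, the standard library's `zipfile` module is enough for this step. The archive name `creditcardfraud.zip` in the usage comment is an assumption about what the download produces, and `unzip_archive` is a hypothetical helper name.

```python
import zipfile
from pathlib import Path


def unzip_archive(zip_path, dest_dir="."):
    """Extract every member of zip_path into dest_dir and return their names."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
        return archive.namelist()


# Usage (file name is an assumption):
# print(unzip_archive("data/creditcardfraud.zip", "data"))
```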

Next, we will load the dataset into our program with this code:

# Code for loading the dataset
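A minimal sketch of the loading step with pandas might look like this. The file name `creditcard.csv` in the usage comment is an assumption, and `load_dataset` is a hypothetical helper name.

```python
import pandas as pd


def load_dataset(csv_path):
    """Read the transactions CSV into a DataFrame."""
    return pd.read_csv(csv_path)


# Usage (path is an assumption):
# df = load_dataset("data/creditcard.csv")
# print(df.shape)
```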

Following that, we will extract the input (X) and the output (Y) from the dataset using the code below:

# Code for extracting input and output
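One possible way to separate the inputs from the label is sketched below. It assumes the label lives in a column named `Class` (1 for fraud, 0 otherwise) and that every other column is a numeric feature. The helper name `split_features_and_label` is hypothetical.

```python
import pandas as pd


def split_features_and_label(df, label_col="Class"):
    """Return (X, y) as NumPy arrays; label_col is the assumed target column."""
    # All remaining columns are treated as numeric features.
    X = df.drop(columns=[label_col]).to_numpy(dtype="float32")
    y = df[label_col].to_numpy()
    return X, y
```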

We will then split the dataset into training and testing sets. The train_test_split function from Scikit-Learn can be employed for this purpose:

# Code for splitting the dataset
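The split could be sketched as follows. The 30% test size and the seed are illustrative assumptions, not values taken from the article, and `split_train_test` is a hypothetical helper name. Stratifying on the label is a choice made here because fraud cases are so rare; it keeps the fraud ratio the same in both sets.

```python
from sklearn.model_selection import train_test_split


def split_train_test(X, y, test_size=0.3, seed=42):
    """Hold out test_size of the rows, stratified so both sets keep the class ratio."""
    return train_test_split(
        X, y, test_size=test_size, random_state=seed, stratify=y
    )


# Usage:
# X_train, X_test, y_train, y_test = split_train_test(X, y)
```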

To ensure no single feature dominates the others, we need to normalize the dataset using the following code:

# Code for normalizing the dataset
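The article does not say which normalization it uses, so this sketch assumes standardization with Scikit-Learn's `StandardScaler`. The scaler is fitted on the training set only, so no information from the test set leaks into training. The helper name `scale_features` is hypothetical.

```python
from sklearn.preprocessing import StandardScaler


def scale_features(X_train, X_test):
    """Standardize each feature using statistics from the training set only."""
    scaler = StandardScaler()
    X_train_scaled = scaler.fit_transform(X_train)
    X_test_scaled = scaler.transform(X_test)
    return X_train_scaled, X_test_scaled
```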

Training the Model

After preparing the dataset, we can proceed to model training. First, I will demonstrate how to train the model using the Scikit-Learn library:

# Code for training the model with Scikit-Learn
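A sketch of what this step might look like is shown here. The hyperparameters are left at their defaults as an assumption, and the helper names `fit_and_score` and `train_sklearn_tree` are hypothetical. Only the call to `fit` is timed, so prediction does not count toward the training time.

```python
import time

from sklearn.metrics import accuracy_score
from sklearn.tree import DecisionTreeClassifier


def fit_and_score(model, X_train, y_train, X_test, y_test):
    """Fit model, then return (test accuracy, training time in seconds)."""
    start = time.perf_counter()
    model.fit(X_train, y_train)
    elapsed = time.perf_counter() - start
    accuracy = accuracy_score(y_test, model.predict(X_test))
    return accuracy, elapsed


def train_sklearn_tree(X_train, y_train, X_test, y_test, seed=42):
    """Train a Scikit-Learn decision tree with default hyperparameters."""
    model = DecisionTreeClassifier(random_state=seed)
    return fit_and_score(model, X_train, y_train, X_test, y_test)


# Usage:
# acc, secs = train_sklearn_tree(X_train, y_train, X_test, y_test)
# print(f"Accuracy: {acc:.3f}, training time: {secs:.2f} s")
```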

In summary, the modeling process consists of initializing the decision tree model, fitting it on the training data, predicting on the test data, and finally calculating the accuracy. This first model reached an accuracy of 99.8%. Training took approximately 29.77 seconds, which is decent but can be improved with Snap.

Before we can leverage Snap, we need to install the library using the following command:

# Code for installing Snap
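Assuming the library is published on PyPI under the name `snapml`, the install command would be `pip install snapml`. The short check below is only a sketch (the helper `is_installed` is hypothetical) that reports whether the package can be imported in the current environment.

```python
import importlib.util


def is_installed(module_name):
    """Return True if module_name can be imported in this environment."""
    return importlib.util.find_spec(module_name) is not None


if not is_installed("snapml"):
    # Assumption: the PyPI package name is "snapml".
    print("Snap ML not found; install it with: pip install snapml")
```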

Now that we have Snap installed, let's implement the training code. You might be surprised to see how similar it is:

# Code for training the model with Snap
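The Snap version might look like the sketch below, assuming `snapml` exposes a `DecisionTreeClassifier` with the familiar `fit` and `predict` methods. The fallback to Scikit-Learn is not part of the article; it is added here only so the sketch still runs where Snap is not installed. The names `BACKEND` and `train_tree` are hypothetical.

```python
import time

from sklearn.metrics import accuracy_score

try:
    # Snap's estimator is used when the library is available.
    from snapml import DecisionTreeClassifier
    BACKEND = "snapml"
except ImportError:
    # Fallback so the sketch still runs without Snap installed.
    from sklearn.tree import DecisionTreeClassifier
    BACKEND = "sklearn"


def train_tree(X_train, y_train, X_test, y_test, seed=42):
    """Fit a decision tree with the available backend; return (accuracy, seconds)."""
    model = DecisionTreeClassifier(random_state=seed)
    start = time.perf_counter()
    model.fit(X_train, y_train)
    elapsed = time.perf_counter() - start
    accuracy = accuracy_score(y_test, model.predict(X_test))
    return accuracy, elapsed


# Usage:
# acc, secs = train_tree(X_train, y_train, X_test, y_test)
# print(f"[{BACKEND}] accuracy: {acc:.3f}, training time: {secs:.3f} s")
```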

Notice how small the differences are? The printed output may vary, but the core difference lies in the import statement: the first snippet imports from Scikit-Learn, whereas the second imports from Snap. The rest of the code remains largely unchanged.

What about the performance? To our delight, the model trained with Snap achieved a slightly higher accuracy of 99.9% and took only about 1.105 seconds to train. That's roughly 27 times faster than Scikit-Learn (29.77 s / 1.105 s ≈ 26.9). Isn't that impressive? Snap allows for rapid model training, especially when dealing with large datasets.
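The speedup follows directly from the two training times quoted in this article. Here is a quick check of the arithmetic, using only those two numbers:

```python
sklearn_seconds = 29.77  # Scikit-Learn training time reported above
snap_seconds = 1.105     # Snap training time reported above

# Ratio of the two training times.
speedup = sklearn_seconds / snap_seconds
print(f"Speedup: {speedup:.1f}x")  # prints "Speedup: 26.9x"
```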

Conclusion

Now that you've been introduced to the Snap library, you've seen that, in this experiment, it cut training time substantially without sacrificing model accuracy. I hope this article helps you build models on large datasets more efficiently.

Thank you for taking the time to read this article!

References

Snap ML, IBM Research Zurich. "Snap Machine Learning Library provides high-speed training of popular machine learning models…" www.zurich.ibm.com

Want to Connect?

Reach out to me on LinkedIn.