Unleashing Your Own AI Assistant: From Concept to Creation


Introduction to AI Assistants

Have you ever imagined having a personal AI assistant like Tony Stark's J.A.R.V.I.S. or F.R.I.D.A.Y. to help manage your tasks, answer questions, and keep you company? This once sci-fi fantasy is now within your grasp! In this guide, I'll walk you through creating your own AI assistant using Python with FastAPI, ReactJS, and the GPT-3 language model.

As we proceed, you'll see a demonstration of the AI assistant and an overview of its system architecture. I'll explain how the backend and frontend work so you understand the inner workings of the project, and I'll share the open-source code on GitHub to kickstart your journey. Whether you're an experienced developer or a curious novice, you should be able to build your own AI assistant.

The Journey: Transforming an Idea into a Functional Web App

In my previous article titled "Creating Your Own AI-Powered Second Brain," I delved into building an AI-powered second brain using Python and ChatGPT. This project successfully organized and retained information based on user-provided context. The positive engagement indicated that many of you found it intriguing.

Now, we elevate this concept by constructing a personal AI assistant capable of conversing, listening, and responding to inquiries in natural language. With the advanced capabilities of GPT-3 and web scraping, this assistant can provide even richer insights beyond the initial context.

Are you ready to transform the way you work and live? Let's dive in!

Demonstration: Experience the AI Assistant in Action

Explaining the functionality here can be challenging, so I’ll first outline the steps involved, followed by a GIF and a YouTube video for a complete demonstration. Here’s how it works:

- Hover over the “Say Something” button.

- The recording will commence.

- Ask your question into the microphone.

- Move your mouse away from the button, and voila!

- Your AI assistant will deliver the answer through the speakers, and you'll see a text transcript of the interaction on the UI.

To truly appreciate the experience, check out the brief 50-second YouTube video below.

A quick overview of the AI assistant in action, showcasing its capabilities and user interface.

If you prefer a different format, here's a GIF demonstrating the personal assistant web app's functionality:

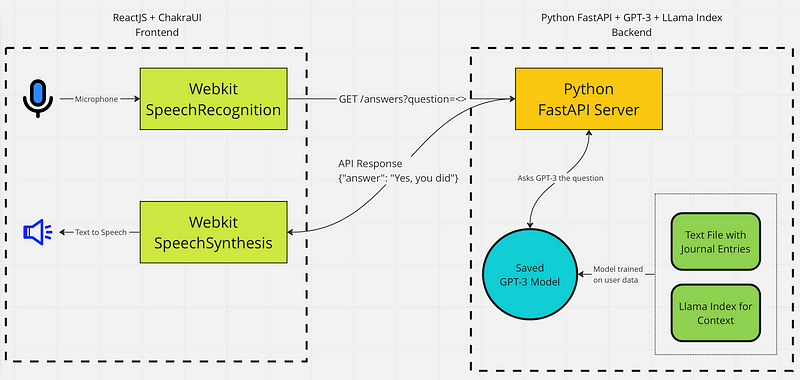

Behind the Scenes: Architecture and System Design

Now, let’s delve into the technical aspects. The system can be broken down into several components:

- GPT-3 serves as the Large Language Model (LLM).

- Llama Index vectorizes the context data and passes the relevant parts to GPT-3.

- A Python FastAPI server exposes the indexed data to the frontend through an HTTP endpoint.

- ReactJS and Chakra UI power the frontend interface.

- The browser's Web Speech API (webkitSpeechRecognition) handles voice input.

- The Web Speech API's SpeechSynthesisUtterance interface handles text-to-speech.

Together, these pieces form a simple pipeline: the browser turns your speech into text, the FastAPI server answers the question using GPT-3 and your indexed data, and the browser reads the answer aloud.

Taking a closer look at both the frontend and backend will deepen your understanding of how this system operates.

The Backend: How Python FastAPI and GPT-3 Drive the Assistant

Recently, ChatGPT has made waves by assisting with a plethora of tasks, ranging from coding to generating art. However, it struggles with personalized inquiries, such as, “What did I eat yesterday?” or “Who did I meet last week?” For it to assist you effectively, it needs access to your personal data.

This is where our framework comes into play:

- A text file containing your journal entries.

- Llama Index processes this text file, vectorizes the data, and provides it as context to GPT-3.

Together, these components let GPT-3 answer questions about your personal history. Keep in mind that this isn't magic: the information must already be in your journal for the assistant to find it.
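To make the Llama Index step less of a black box, here is a minimal, dependency-free sketch of the retrieve-then-prompt idea behind a vector index. It is not Llama Index's implementation: the word-count "embedding" stands in for a real embedding model, and the one-chunk-per-line rule, the function names, and the prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_index(journal: str) -> list:
    """Split the journal into chunks (one per non-empty line) and embed each one."""
    chunks = [line.strip() for line in journal.splitlines() if line.strip()]
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index: list, question: str, top_k: int = 1) -> list:
    """Return the top_k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


def build_prompt(context: list, question: str) -> str:
    """Combine the retrieved context and the question into one LLM prompt."""
    return "Context:\n" + "\n".join(context) + f"\n\nAnswer using only the context above: {question}"


journal = "Monday: ate ramen for dinner.\nTuesday: met Alice to plan the project."
index = build_index(journal)
question = "What did I eat for dinner?"
print(build_prompt(retrieve(index, question), question))
```

Here the Monday line wins because it shares "for" and "dinner" with the question. A real setup uses semantic embeddings (so "meet" would also match "met") and sends the final prompt to GPT-3 to phrase the answer.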

Here's how to build and save the index from your journal, then query it from the command line:

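```python
# Uses the early llama_index API from this article; newer releases replaced
# GPTSimpleVectorIndex with a different API (e.g. VectorStoreIndex).
# llama_index calls OpenAI, so set the OPENAI_API_KEY environment variable first.
from llama_index import GPTSimpleVectorIndex, SimpleDirectoryReader

# Load every file in ./data (e.g. your journal text file)
documents = SimpleDirectoryReader("./data").load_data()

# Create a simple vector index and save it for the API server
index = GPTSimpleVectorIndex(documents)
index.save_to_disk("generated_index.json")

# Interactive loop for user queries; press Enter on an empty line to quit
while True:
    query = input("Ask a question: ")
    if not query:
        print("Goodbye")
        break
    result = index.query(query)
    print(result)
```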
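Because SimpleDirectoryReader loads every file in ./data, you can keep adding to the journal over time. Below is a small sketch of a helper for that; the file name journal.txt and the "YYYY-MM-DD: text" line format are assumptions, not something Llama Index requires. Since the script above builds the index once, rerun it after adding entries so that generated_index.json includes them.

```python
from datetime import date
from pathlib import Path
from typing import Optional


def append_entry(text: str, data_dir: str = "./data", day: Optional[date] = None) -> Path:
    """Append one dated line to <data_dir>/journal.txt (hypothetical file name)."""
    day = day or date.today()
    path = Path(data_dir) / "journal.txt"
    path.parent.mkdir(parents=True, exist_ok=True)  # create the data folder if missing
    with path.open("a", encoding="utf-8") as f:
        f.write(f"{day.isoformat()}: {text.strip()}\n")
    return path
```

For example, append_entry("Had lunch with Sam at the new Thai place.") adds a line dated today, which the assistant can use once the index is rebuilt.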

Next, create a simple FastAPI endpoint that loads the saved index and answers questions against it:

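```python
# Run with: uvicorn main:app (assuming this file is named main.py);
# uvicorn serves on http://127.0.0.1:8000 by default.
from fastapi import FastAPI
from fastapi.middleware.cors import CORSMiddleware
from llama_index import GPTSimpleVectorIndex

app = FastAPI()

# Define allowed origins: add the URL your React app is served from,
# e.g. "http://localhost:3000" for the Create React App dev server
origins = [
]

app.add_middleware(
    CORSMiddleware,
    allow_origins=origins,
    allow_credentials=True,
    allow_methods=["*"],
    allow_headers=["*"],
)

# Load the saved index once at startup instead of on every request
index = GPTSimpleVectorIndex.load_from_disk("generated_index.json")


# A plain `def` lets FastAPI run the blocking query in its thread pool,
# so it does not stall the event loop
@app.get("/answers")
def get_answer(question: str):
    answer = index.query(question)
    return {"answer": answer.response}
```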

With this endpoint running, the frontend can send the user's question to /answers and display the answer it returns.
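Before wiring up the UI, it can help to call the endpoint from Python to confirm the server works. This is a minimal standard-library sketch; it assumes the server is running at http://127.0.0.1:8000 (the address the React code below uses) and returns the JSON shape shown above. The answer_url() helper also shows why the question must be URL-encoded: a spoken question containing "?" or "&" would otherwise break the query string.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "http://127.0.0.1:8000"  # assumed local FastAPI address


def answer_url(question: str, base_url: str = BASE_URL) -> str:
    """Build the /answers URL with the question safely URL-encoded."""
    return f"{base_url}/answers?{urlencode({'question': question})}"


def ask(question: str, base_url: str = BASE_URL) -> str:
    """Call the FastAPI endpoint and return the "answer" field of its JSON response."""
    with urlopen(answer_url(question, base_url)) as response:
        return json.load(response)["answer"]
```

With the server running, ask("What did I eat yesterday?") returns the answer text that the frontend would otherwise speak aloud.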

The Frontend: Bringing the AI Assistant to Life with ReactJS

The user interacts with the web app, which serves four primary functions:

- Capture the user’s spoken question and convert it to text.

- Forward the question to the server via an API call.

- Transform the server's textual response into speech, delivering it through the user’s speakers.

- Display the transcript during speech-to-text and text-to-speech processes.

Here's how to implement the frontend using ReactJS:

```jsx
import React, { useState, useEffect } from "react";
import { Button, VStack, Center, Heading, Box, Text } from "@chakra-ui/react";

function App() {
  const [transcript, setTranscript] = useState("");
  const [answer, setAnswer] = useState("");
  const [isRecording, setIsRecording] = useState(false);
  const [buttonText, setButtonText] = useState("Say Something");
  const [recognitionInstance, setRecognitionInstance] = useState(null);

  useEffect(() => {
    // webkitSpeechRecognition is the prefixed Web Speech API constructor
    // found in Chromium-based browsers.
    const recognition = new window.webkitSpeechRecognition();
    recognition.continuous = true;
    recognition.interimResults = true;
    recognition.lang = "en-US";

    recognition.onresult = (event) => {
      let interimTranscript = "";
      let finalTranscript = "";
      // event.results holds every result of the current session, so rebuild
      // the text from index 0 to keep earlier finalized phrases.
      for (let i = 0; i < event.results.length; i++) {
        const text = event.results[i][0].transcript;
        if (event.results[i].isFinal) {
          finalTranscript += text + " ";
        } else {
          interimTranscript += text;
        }
      }
      setTranscript(finalTranscript + interimTranscript);
    };

    setRecognitionInstance(recognition);
    // Stop listening if the component unmounts.
    return () => recognition.abort();
  }, []);

  // Start listening when the pointer enters the button.
  const recordAudio = () => {
    if (!recognitionInstance || isRecording) return;
    setAnswer("");
    setTranscript("");
    setButtonText("Recording...");
    setIsRecording(true);
    recognitionInstance.start();
  };

  // Stop listening when the pointer leaves, ask the backend, and speak the reply.
  const stopAudio = async () => {
    if (!recognitionInstance || !isRecording) return;
    setButtonText("Say Something");
    setIsRecording(false);
    recognitionInstance.stop();
    // Uses the text recognized so far; results arriving after stop() are not sent.
    if (!transcript.trim()) return;

    const response = await fetch(
      `http://127.0.0.1:8000/answers?question=${encodeURIComponent(transcript)}`
    );
    const data = await response.json();
    setAnswer(data["answer"]);

    const utterance = new SpeechSynthesisUtterance(data["answer"]);
    window.speechSynthesis.speak(utterance);
  };

  return (
    <Box
      bg="black"
      h="100vh"
      display="flex"
      justifyContent="center"
      alignItems="center"
      padding="20px"
    >
      <Center>
        <VStack spacing={12}>
          <Heading color="red.500" fontSize="8xl">
            I am your personal assistant
          </Heading>
          <Button
            colorScheme="red"
            width="300px"
            height="150px"
            onMouseEnter={recordAudio}
            onMouseLeave={stopAudio}
            fontSize="3xl"
          >
            {buttonText}
          </Button>
          {transcript && (
            <Text color="whiteAlpha.500" fontSize="2xl">
              Question: {transcript}
            </Text>
          )}
          {answer && (
            <Text color="white" fontSize="3xl">
              <b>Answer:</b> {answer}
            </Text>
          )}
        </VStack>
      </Center>
    </Box>
  );
}

export default App;
```
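The onresult handler is the least obvious part of the component. Here is a rough Python model of the same bookkeeping, where each result carries its top transcript and a final flag, mirroring event.results[i][0].transcript and event.results[i].isFinal in the Web Speech API. The Result class and assemble() function are hypothetical names for illustration. The start parameter shows what happens if you rebuild the text from event.resultIndex instead of 0: in continuous mode, event.results holds every result of the session, so starting later drops phrases finalized in earlier events.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Result:
    transcript: str  # like event.results[i][0].transcript
    is_final: bool   # like event.results[i].isFinal


def assemble(results: List[Result], start: int = 0) -> Tuple[str, str]:
    """Split recognized speech into (final, interim) text, reading results from `start` on."""
    final_parts = []
    interim_parts = []
    for result in results[start:]:
        text = result.transcript.strip()
        if result.is_final:
            final_parts.append(text)
        else:
            interim_parts.append(text)
    return " ".join(final_parts), " ".join(interim_parts)


session = [
    Result("what did I", True),
    Result("eat", True),
    Result("yesterday", False),
]
print(assemble(session))           # ('what did I eat', 'yesterday')
print(assemble(session, start=1))  # ('eat', 'yesterday'): the first phrase is lost
```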

While there are more advanced libraries for generating natural-sounding AI voices, I opted for simplicity and used the browser's built-in Web Speech API.

Get Started: Build Your Personal AI Assistant Today!

If you’ve made it this far, thank you for your interest! I hope you found this guide informative and inspiring. The source code is available on my GitHub repository. If you're comfortable with coding, I encourage you to clone it and embark on your journey to create your personal AI assistant.

For better answers, consider exporting data from your task manager and calendar into your text file. I built my index from ClickUp and Google Calendar data, which turned out to be incredibly helpful!
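If your task manager or calendar can export CSV, a few lines of Python can turn that export into journal entries. This is a sketch under assumptions: the column names date and title are hypothetical, so check the headers of your actual export (Google Calendar, for example, exports .ics files, which would need converting first), and rows missing either field are skipped.

```python
import csv
import io
from typing import List


def csv_to_journal_lines(csv_text: str, date_col: str = "date", title_col: str = "title") -> List[str]:
    """Convert CSV rows into 'date: title' journal lines (column names are assumptions)."""
    lines = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        day = (row.get(date_col) or "").strip()
        title = (row.get(title_col) or "").strip()
        if day and title:  # skip rows missing either field
            lines.append(f"{day}: {title}")
    return lines


export = "date,title\n2023-03-01,Dentist appointment\n2023-03-02,Team sync\n2023-03-03,\n"
for line in csv_to_journal_lines(export):
    print(line)
```

Append the resulting lines to the text file in ./data and rebuild the index so the assistant can answer questions about them.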

I’m eager to hear your thoughts and experiences. Thank you for reading!

If you found this useful, please consider expressing your appreciation and following me on Medium.

Level Up Coding
