The Future of Computing: Why Analog Technology is Making a Comeback


Chapter 1: Understanding Analog Computing

Analog computing could reshape the tech landscape! From machine learning to more energy-efficient processors, the approach promises major gains in speed and efficiency for certain tasks. It's time to look toward the future!

Most contemporary devices, such as laptops and smartphones, use digital computing: they process information as a binary system of ones and zeros. Analog computing, in contrast, handles information as continuously varying signals. Although analog computers are an ancient technology, they are regaining momentum. Why, after all this time, are they back in the spotlight?

Analog Computers Could Revolutionize AI Technology. (Credit: MIT News)

What Exactly is an Analog Computer?

The fundamental idea behind an analog computer is that it performs calculations on a continuous signal that directly represents a measured quantity. For instance, such a system might represent the speed of a ball as a voltage: the faster the ball, the higher the voltage. Analog computers have existed for thousands of years, with early examples using mechanical motion to calculate. A famous historical example is the Antikythera Mechanism, an intricate device built by the ancient Greeks more than two millennia ago to track the movements of celestial bodies. Its complexity went unmatched for roughly another millennium.

The Antikythera Mechanism, a 2000-year-old Analog Computer. (Credit: Atlas Obscura)
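The ball-and-voltage example can be made concrete with a minimal Python sketch. It assumes a hypothetical scale of 0.1 V per m/s and a 10 V full-scale range (neither comes from the article), then contrasts the analog voltage, which can take any value in its range, with digital encodings that must round the same value to one of a fixed number of levels.

```python
VOLTS_PER_MPS = 0.1  # hypothetical scale factor: 0.1 V stands for 1 m/s
V_MAX = 10.0         # assumed full-scale voltage of the machine


def speed_to_voltage(speed_mps: float) -> float:
    """Analog encoding: the voltage is directly proportional to the speed."""
    return speed_mps * VOLTS_PER_MPS


def quantize(voltage: float, bits: int) -> float:
    """Digital encoding: snap the voltage to the nearest of 2**bits levels in [0, V_MAX]."""
    voltage = min(max(voltage, 0.0), V_MAX)  # clamp to the representable range
    step = V_MAX / (2 ** bits - 1)           # spacing between adjacent levels
    return round(voltage / step) * step


analog = speed_to_voltage(37.3)
for bits in (4, 8, 12):
    digital = quantize(analog, bits)
    print(f"{bits:>2}-bit: {digital:.4f} V (error {abs(digital - analog):.4f} V)")
print(f"analog: {analog:.4f} V")
```

In practice, noise also limits the effective precision of a real analog signal, which is one reason analog hardware suits tasks that tolerate small errors.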

Over the last few centuries, many other analog computers have emerged, from slide rules, which multiply and divide using logarithmic scales, to Lord Kelvin’s tide-predicting machine. Mechanical analog computers even played crucial roles in the World Wars, calculating when and where to fire naval guns. However, as digital computers gained prominence, analog systems gradually fell out of favor.
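A slide rule multiplies by adding lengths proportional to logarithms, because log(a) + log(b) = log(ab). The Python sketch below mimics that process: it converts each factor to a distance on a log scale, adds the distances as sliding one scale along the other would, and reads the product back. The 25 cm scale length and the half-millimetre reading precision are assumptions chosen for illustration.

```python
import math

SCALE_LENGTH_CM = 25.0  # assumed length of one decade (1 to 10) on the rule


def to_length(x: float) -> float:
    """Distance from the '1' mark at which x is printed on a log scale."""
    return SCALE_LENGTH_CM * math.log10(x)


def from_length(length: float) -> float:
    """Inverse mapping: the value printed at a given distance."""
    return 10 ** (length / SCALE_LENGTH_CM)


def slide_rule_multiply(a: float, b: float, read_precision_cm: float = 0.05) -> float:
    """Multiply a and b (assumed to lie in [1, 10)) by adding their log lengths.

    A physical rule needs its other index for products above 10; this sketch
    simply extends the scale instead.
    """
    total = to_length(a) + to_length(b)
    # Rounding models how precisely a person can read the scale by eye (assumed).
    total = round(total / read_precision_cm) * read_precision_cm
    return from_length(total)


print(slide_rule_multiply(2.0, 3.0))  # close to, but not exactly, 6
```

The rounding step is what makes the answer approximate: the calculation itself is exact, but reading a continuous scale is not.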

What Advantages Do They Offer?

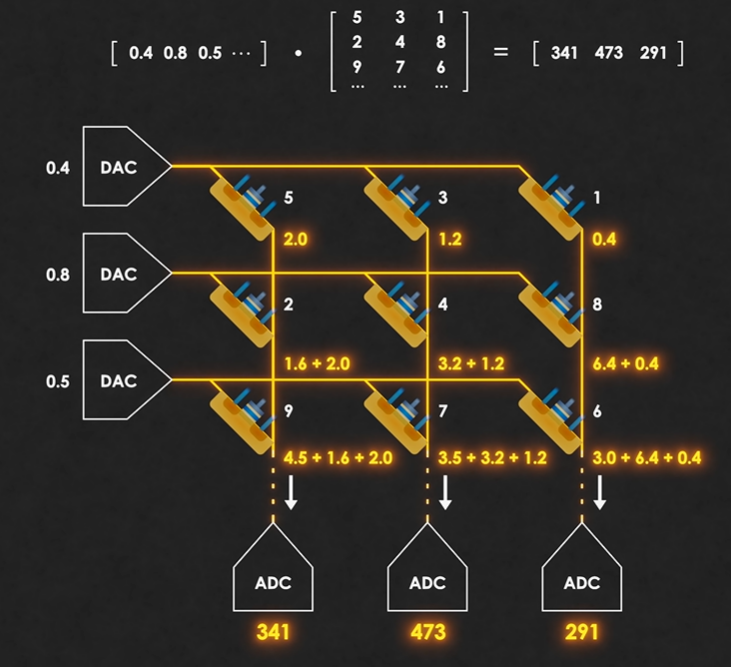

Today, analog computers are making a resurgence because they hold distinct advantages over their digital counterparts. They excel at certain complex calculations on vast amounts of data, such as the matrix multiplications at the core of neural networks. That capability may seem irrelevant to everyday computing, but it is vital for machine learning. Integrating dedicated analog chips into digital systems could create hybrids that make AI faster and more efficient.

A Diagram of How Analog Computers Could Perform Complex Calculations. (Credit: Veritasium)
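One common way analog AI chips perform these calculations is the crossbar principle, sketched below. This is the general idea, not a description of any specific company's chip. Each weight is stored as a conductance G and each input is applied as a voltage V. Ohm's law (I = G·V) performs every multiplication, and Kirchhoff's current law sums the currents flowing onto each shared output wire, so the whole matrix-vector product appears at once as a set of currents. The Python below only simulates this; the matrix values and the 2% per-cell noise level are hypothetical.

```python
import random


def crossbar_mvm(conductances, voltages, noise_std=0.0, rng=None):
    """Simulate an analog crossbar computing I = G · V.

    conductances: rows x cols matrix of cell conductances (siemens)
    voltages: input voltage applied to each column (volts)
    noise_std: assumed relative noise per cell, standing in for device variation
    Returns the total current collected on each row wire (amperes).
    """
    if rng is None:
        rng = random.Random(0)  # fixed seed so the simulated noise is repeatable
    currents = []
    for row in conductances:
        total = 0.0
        for g, v in zip(row, voltages):
            # Ohm's law: each cell contributes a current I = G * V.
            cell_current = g * v
            if noise_std:
                cell_current *= 1.0 + rng.gauss(0.0, noise_std)
            # Kirchhoff's current law: currents on the shared row wire add up.
            total += cell_current
        currents.append(total)
    return currents


G = [[1e-6, 2e-6, 3e-6],
     [4e-6, 5e-6, 6e-6]]  # hypothetical weights encoded as conductances
V = [0.1, 0.2, 0.3]       # hypothetical inputs encoded as voltages

ideal = crossbar_mvm(G, V)
noisy = crossbar_mvm(G, V, noise_std=0.02)
print(ideal)
print(noisy)
```

Real chips need extra machinery that this sketch omits, such as pairs of cells for negative weights and converters between the analog and digital domains. The noisy run hints at why analog suits neural networks, which tolerate small errors better than most software does.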

Analog computers can also be more energy-efficient. Instead of spending significant power switching transistors between binary states, they work with small, continuous variations in voltage and current, so an analog processor could use a fraction of the energy a digital one needs for the same task.

Benefits of Analog Computers

- Reduced Power Consumption

- Faster Processing for Certain Tasks

- Enhanced AI Models

Potential Challenges

Despite these promising benefits, numerous hurdles must be overcome before analog computing can become mainstream. Companies working in the field today, such as IBM and the startup Mythic, are focused mainly on cutting the energy consumption of machine learning rather than on using analog computing for general-purpose calculations.

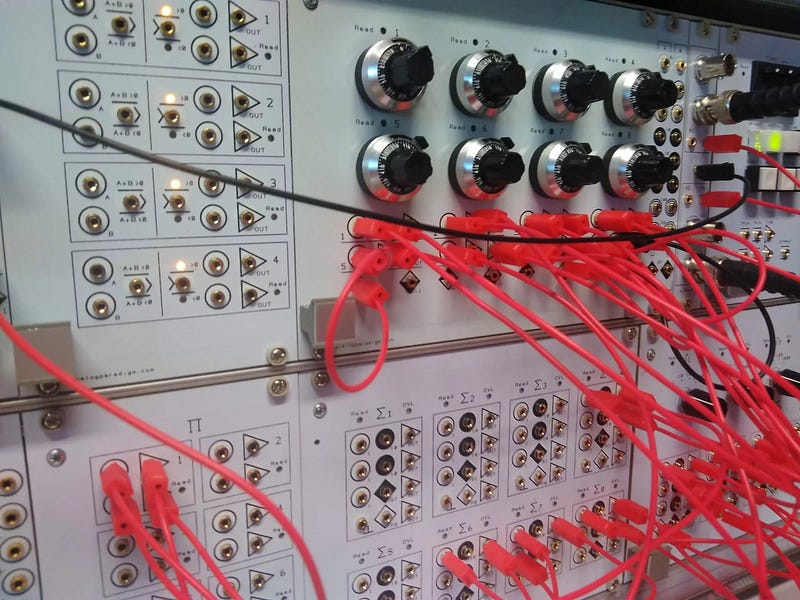

An Analog Computer System. (Credit: Bernd Ulmann)

Even if analog computing does become widespread, fundamental challenges will limit how it can be applied. A significant obstacle is programming. Because analog computers operate differently from traditional systems, conventional programming constructs such as loops and conditional statements do not apply. Instead, users must learn to wire together basic components and measure voltages and currents to carry out calculations.
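To give a flavor of this style of programming, the Python sketch below models a classic analog setup for a ball thrown straight up. Two integrators are "wired" in series: the first turns a constant input of -g into velocity, and the second turns velocity into height. The only "program" is the wiring plus the starting values. The `Integrator` class and the parameter values are hypothetical, and the Python loop is merely a digital stand-in for continuous time. Real electronic integrators usually also invert the sign of their input, which this sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class Integrator:
    """Hypothetical model of an analog integrator: its output accumulates
    the integral of its input over time."""
    output: float = 0.0  # initial condition, set before the run starts

    def step(self, input_value: float, dt: float) -> float:
        self.output += input_value * dt
        return self.output


def simulate_thrown_ball(v0: float, g: float = 9.81, dt: float = 1e-4, t_end: float = 1.0):
    """Solve x'' = -g by chaining two integrators.

    Integrator 1: input -g, output velocity v(t).
    Integrator 2: input v(t), output height x(t).
    """
    velocity = Integrator(output=v0)  # initial condition: launch speed (m/s)
    height = Integrator(output=0.0)   # initial condition: start at ground level (m)
    for _ in range(int(round(t_end / dt))):
        v = velocity.output           # the "wire" from integrator 1 to integrator 2
        velocity.step(-g, dt)         # dv/dt = -g
        height.step(v, dt)            # dx/dt = v
    return velocity.output, height.output


velocity, height = simulate_thrown_ball(v0=10.0)
print(f"after 1 s: v = {velocity:.3f} m/s, x = {height:.3f} m")
```

For comparison, the exact solution after one second is v = v0 - g·t = 0.19 m/s and x = v0·t - g·t²/2 = 5.095 m. A physical analog machine would produce these values continuously, with no step size at all.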

Disadvantages of Analog Computers

- Limited to Specific Tasks

- Requires a Different Programming Approach

- Still in Development Stages

Summary

Ultimately, the revival of analog computing signifies a new chapter in computer science and engineering. It highlights the enduring value of older technologies, suggesting that progress sometimes requires revisiting past innovations. The future of analog computing remains uncertain, yet its potential applications are vast. One thing is clear: the analog revolution is just beginning!

Chapter 2: Insights from the Experts

In this insightful video titled "Why the Future of AI & Computers Will Be Analog," experts discuss the anticipated shift toward analog technology and its implications for the future of AI and computing.

Another compelling video, "Analog computing will take over 30 billion devices by 2040. Wtf does that mean?" explores the potential impact of analog technology on future devices and their functionalities.